I’ve spent some time thinking about how input is handled by my GUI library. One issue I didn’t cover in its initial design is that people might want to use the GUI library at the same time as their game is running (think of a command palette in a strategy game). I’ve already received some requests on the CodePlex forums (“How to determine if a screen-position (i.e. mouseclick) is on any gui-control?” and “Unfocusing from GUI”), and it’s about time I did something about this.

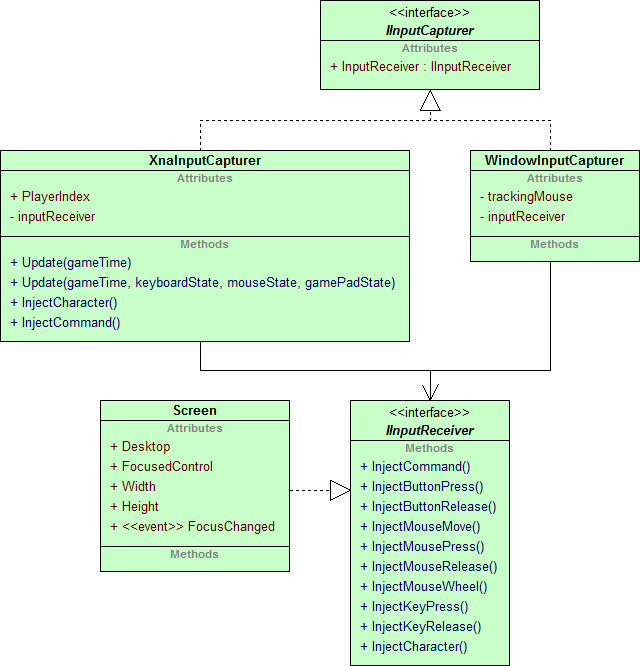

In the old design, an IInputReceiver was fed by one of two classes: one was the XnaInputCapturer, which relied completely on XNA’s input device classes (Keyboard, Mouse, GamePad) to track the status of any input devices; the other was the WindowInputCapturer, which intercepted incoming window messages for XNA’s main window to obtain the status of input devices:

This was far from an ideal solution because I would have to copy and paste the game pad polling code from the XnaInputCapturer to the WindowInputCapturer if I wanted game pad input on Windows. The new design should fix this, but still follow the concept of routing all input through a single interface (IInputReceiver) to allow users to easily attach the GUI to their own input handling code.
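To make the single-interface idea a bit more concrete, here is a minimal sketch of how a game's own input code could feed a receiver. The names `ISketchInputReceiver`, `RecordingReceiver` and `CustomInputSource` are invented for this illustration; the sketch is far smaller than the real IInputReceiver and is not meant to mirror its actual members.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, heavily reduced stand-in for an input receiver interface
public interface ISketchInputReceiver {
  void InjectKeyPress(int keyCode);
  void InjectMouseMove(int x, int y);
}

// Example receiver that only records what it was given
public sealed class RecordingReceiver : ISketchInputReceiver {
  public readonly List<string> Log = new List<string>();

  public void InjectKeyPress(int keyCode) { Log.Add("key:" + keyCode); }
  public void InjectMouseMove(int x, int y) { Log.Add("mouse:" + x + "," + y); }
}

// A game's own input handling code (hypothetical) only needs a reference
// to the receiver and can forward whatever it has already captured
public sealed class CustomInputSource {
  private readonly ISketchInputReceiver receiver;

  public CustomInputSource(ISketchInputReceiver receiver) {
    if (receiver == null) throw new ArgumentNullException("receiver");
    this.receiver = receiver;
  }

  public void OnGameKeyPressed(int keyCode) { receiver.InjectKeyPress(keyCode); }
  public void OnGameMouseMoved(int x, int y) { receiver.InjectMouseMove(x, y); }
}
```

Because the source only depends on the interface, it does not matter whether the input originally came from polling, window messages or something else entirely.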

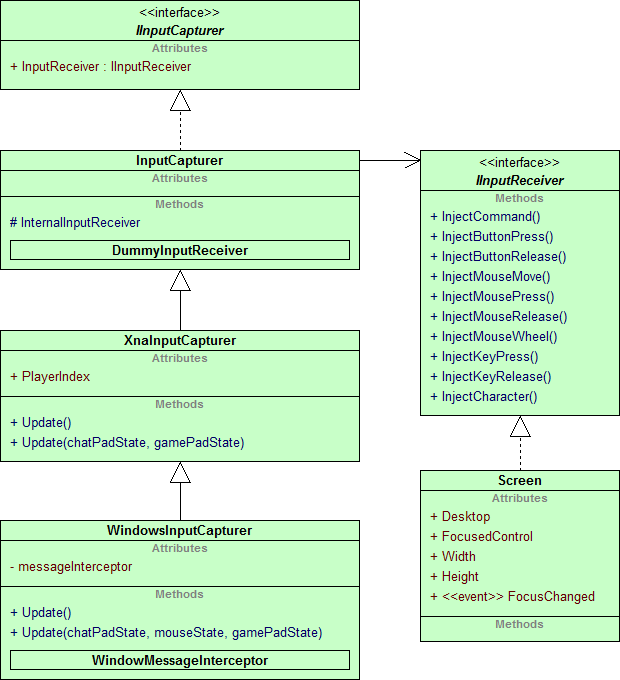

As a first step, I turned the XnaInputCapturer into a shared base class and made the WindowInputCapturer derive from it. I also removed all mouse polling code from the XnaInputCapturer because this is either completely replaced by window messages or done purely through the XNA Mouse class (if you can attach a mouse to the Xbox 360, I’ll have to see if this works). I kept the keyboard polling code, however, because of this little gem:

It’s a chat pad and it can be attached to an Xbox 360 controller, meaning it can be connected to the PC as well and needs to be polled via the XNA Keyboard class (because, being an XINPUT device, I doubt it will just become a normal keyboard in Windows; can anyone confirm this?).
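As a rough illustration of the "shared base class" arrangement, the sketch below keeps the polling in a base class and lets a derived class add message-driven input on top. `PollingCapturerSketch`, `MessageCapturerSketch` and the `Func<int>` poll callback are hypothetical names made up for this example; they are not the actual Nuclex classes, and the callback merely stands in for whatever device polling the base class would really do.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical base capturer: owns the polling that both variants share
public class PollingCapturerSketch {
  private readonly Func<int> pollGamePadButtons; // stand-in for real device polling
  public readonly List<string> Forwarded = new List<string>();

  public PollingCapturerSketch(Func<int> pollGamePadButtons) {
    if (pollGamePadButtons == null) throw new ArgumentNullException("pollGamePadButtons");
    this.pollGamePadButtons = pollGamePadButtons;
  }

  // Called once per frame; forwards the polled button bitmask
  public void Update() {
    Forwarded.Add("pad:" + pollGamePadButtons());
  }
}

// Hypothetical derived capturer: inherits the polling unchanged and adds
// input that arrives through window messages instead
public class MessageCapturerSketch : PollingCapturerSketch {
  public MessageCapturerSketch(Func<int> pollGamePadButtons)
    : base(pollGamePadButtons) { }

  // In a real implementation this would be fed by a window message hook
  public void OnMouseMoveMessage(int x, int y) {
    Forwarded.Add("mousemove:" + x + "," + y);
  }
}
```

The point of the arrangement is that the game pad polling exists exactly once, so the window-message variant gets it for free instead of through copy and paste.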

So as an intermediate design step, the trunk build of my Nuclex Framework now uses this improved design:

Before I begin modifying the GUI input response code to make it easier for the GUI to coexist with gameplay running at the same time, there remain two things to solve:

- First is the design recommendation that XNA games on the Xbox 360 should poll all connected input devices at the title screen and make the one that is used first the primary device (the idea being that a player could have several game pads attached and just pick up a random one to start playing).

  Switching to one input device is already supported by the current design through the PlayerIndex property in all input capturers, but I want to see if I can support it better.

- Second is the integration of DirectInput devices. Before I bought my Xbox 360 last year, I only had a normal game pad that didn’t support XINPUT, so it was out of the loop for XNA.

  I want to seamlessly integrate standard game pads and joysticks with XNA. There’s already some DirectInput code out there and I’ve done a full-featured DirectInput-based input manager in the past, so this shouldn’t be too difficult.
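The title screen recommendation from the first point can be sketched roughly as follows. `PrimaryPadDetectorSketch` and its `isActive` callback are hypothetical; in an XNA game the callback might wrap GamePad.GetState() for the matching PlayerIndex and check whether Start or A is pressed, but that wiring is left out here so the example stays self-contained.

```csharp
using System;

public static class PrimaryPadDetectorSketch {
  // XNA's PlayerIndex enumeration covers One through Four
  public const int MaxPlayers = 4;

  // Returns the zero-based index of the first pad reporting activity,
  // or -1 if no pad has been used yet. 'isActive' is a hypothetical
  // callback supplied by the game.
  public static int FindFirstActive(Func<int, bool> isActive) {
    if (isActive == null) throw new ArgumentNullException("isActive");
    for (int index = 0; index < MaxPlayers; ++index) {
      if (isActive(index)) return index;
    }
    return -1;
  }
}
```

A title screen would call this once per frame until it returns something other than -1 and then store the result as the primary player index for the rest of the session.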

I’m even wondering whether it makes sense to pull all that input handling stuff out of the Nuclex.UserInterface library and put it into a separate library (Nuclex.Input or so). This would allow me to provide event-based input, perhaps even a multi-threaded polling solution at a later date, together with support for standard joysticks and game pads for XNA.
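For a feel of what event-based input on top of polling could look like, here is a small sketch that compares two consecutive button snapshots and raises events for the differences. `ButtonEventSourceSketch` is an invented name and this is only one possible approach, assuming every snapshot has the same length; it says nothing about how such a library would actually be designed.

```csharp
using System;

// Hypothetical helper that turns polled button snapshots into events
public sealed class ButtonEventSourceSketch {
  public event Action<int> ButtonPressed;
  public event Action<int> ButtonReleased;

  private bool[] previous = new bool[0];

  // Call once per frame with the freshly polled button states
  public void Update(bool[] current) {
    if (current == null) throw new ArgumentNullException("current");
    for (int i = 0; i < current.Length; ++i) {
      bool wasDown = (i < previous.Length) && previous[i];
      if (current[i] && !wasDown && ButtonPressed != null) ButtonPressed(i);
      if (!current[i] && wasDown && ButtonReleased != null) ButtonReleased(i);
    }
    // Keep a copy so later changes to the caller's array don't affect us
    previous = (bool[])current.Clone();
  }
}
```

Subscribers only hear about changes, so holding a button down for many frames produces a single ButtonPressed notification rather than one per frame.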

John Sedlak had something similar in his XNA5D library (I think it’s now been renamed to “Focused Games Framework”) and I remember it being used successfully by some developers. Haven’t tried it myself so far because I wasn’t sold on the design (I hate message objects being passed around with a passion :D). The idea is sound and useful, though!

Expect some goodness soon! As always, feedback is very welcome ;-)