Sometimes, games have to render highly dynamic geometry such as sparks, bullet trails, muzzle flashes and lightning arcs. Sometimes it’s possible to off-load the work of simulating these things to the GPU, but there are effects than can’t be done by the GPU alone.

These cases usually occur when effects require heavy interaction with level geometry or when they require lots of conditionals to mutate a persistent effect state. And sometimes, the effort of simulating an effect on the GPU is just not worth the results. If you have maybe a hundred instances of a bullet trail at once, letting the GPU orient the constrained billboards for the trails instead of generating the vertices on the CPU might just not yield any tangible benefits.

However, there are still a lot of traps you can run into. A typical mistake of the

unknowing developer is to render the primitives one-by-one either using one

Draw[Indexed]UserPrimitives() call per spark/trail/arc.

This is not a good idea because modern GPUs are optimized for rendering large numbers

of polygons at once. When you call Draw[Indexed]UserPrimitives(), XNA will

call into Direct3D, which will cause a call into driver (which means a call from code

running in user mode to code running in kernel mode, which is especially slow). Then

the vertices are added to the GPU’s processing queue.

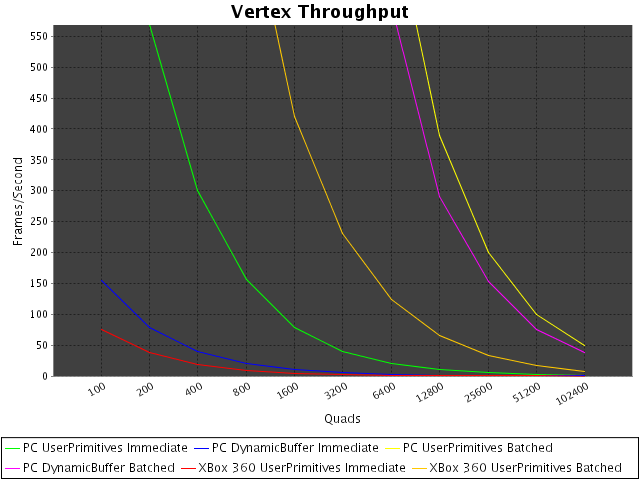

By sending single primitives to the GPU, this per-call overhead is multiplied and can become a real bottleneck. To demonstrate the effects of making lots of small drawing calls, I wrote a small benchmark and measured the results on my GeForce 8800 GTS 512 and on whatever the XBox 360 uses as its GPU.